Sunday, March 14, 2010

TADA!

It's done!

Man, this project took a lot of blood, sweat, and tears. I was sick all week (after a grueling weekend of work on this), but luckily the only task at hand was to apply coats of varnish and wait for them to dry. Tonight I went into the lab with the finished shell and put in the final 8 hours: cutting the sheet-metal back plate, mounting the sensors, wiring the innards, and mounting the feet. I am pleased with the end result. What do you think?

The best part about today was that after I stuffed all the wires in the cavity and barely managed to secure the back plate, I found only two bugs in the software that reads the sensors, so I opened her back up and quickly fixed them. Now I have a working controller. I say this to stress the importance of prototyping. If you ever want to build something of nontrivial complexity, prototyping is the only way to discover the 'essential' issues. Without that knowledge, you're dead in the water because, believe me, the 'emergent' issues will be lying in wait when you go to do the final build.

Sunday, March 7, 2010

Progress

A LOT of work has gone into the touchboard. From Thursday to Sunday I put in approximately 35 hours. The tasks that got done were: routing/drilling/filing/sanding/varnishing the padauk, re-wiring and heat-shrinking most of the sensor components, redesigning the circuit around two 4051 multiplexer ICs to get more I/O than the Arduino has on its own, redesigning the Arduino firmware, and modifying the Max/MSP patch that receives the serial data from the Arduino. It was a weekend full of sawdust, solder, code, music, and beer. My favorite things :)

Tuesday, March 2, 2010

Building an instrument

Building your own instruments is a lot of fun, and very rewarding. It is also a lot of work and an excellent learning experience.

I'm building a custom controller for myself as part of my 250B (HCI) project. The vision behind the project is to have a very solid and sturdy (think heavy) interface for delicate expressive interaction. The input is achieved with pressure- and position-sensitive strips mounted to the surface. These sensors will be covered with dense foam to increase the amount of physical travel. The motivation is to explore the effects of such materials as a form of haptic feedback for the performer. The device will also be equipped with a rotary encoder, six LEDs, and four buttons. In the future, it will be expanded with another pressure sensor (double the length of the others) and analog outputs for use with voltage-controlled gear such as modular synthesizers. Here are some images of the work in progress.

Modifying an FSR strip

early experiments

First prototype

Second prototype, after getting handy with a router

Piece of Padauk to be used in final rendition

And here's Tom, the fine gentleman who patiently explained all I needed to know about buying hardwood and led me to that beauty of a block (and at a great price)

Wednesday, January 20, 2010

Fun Stuff!

The quarter is already well underway and we hit the ground running, with a rocket strapped to our backs. So far I've created some music based on cellular automata, written three iPhone apps, designed a spherical MIDI controller (images soon to come), and learned a thing or two about physical modeling.

Tuesday, January 5, 2010

I suck at blogging

Well, it's been forever since I updated the blog. I should have at least posted the "sorry for not posting" post some time ago. Anyway, it's irrelevant now (and who the heck other than me reads this, anyway?). So here's what I forgot to blog about:

- The augmented violin project. In 250A (Musical Interaction Design) each student had to submit a final project proposal. Mine would have been a spherical input device replete with encoders, sliders, LEDs, and an accelerometer. However, the final projects were done in groups, so not everyone's project was realized. The one I worked on was an augmented violin, which is a regular violin with electronic sensors added, plus some software to process the sensor data as "gestural input", plus some more software to sonify this gestural data.

- The Insaniac. The final project in 256A (Music, Computing, Design). This was a cross between a granular synthesizer and a kinematics simulation, all wrapped up in an aesthetically pleasing, animated, toy/instrument program. In other words, a glorified screen saver.

- The Mini-Instrument. A bunch of electronic sensors and LEDs mounted on a piece of foamboard and connected via perfboard to a breadboard with an Arduino-based circuit on it. I played with this thing for hours as an input device to some software designed specifically for it. But I didn't record it! Now, the thing has been destroyed, the parts returning to the Max Lab aether. There is something extremely satisfying about creating something beautiful, not using it, and then destroying it. Ashes to ashes.

EDIT: It wasn't destroyed after all! Yay!

- The Music Programming Toolkit, AKA MusKit or MTK. Inspired by the Synthesis Toolkit (STK), I wanted to create a class library that can be used to develop music software. What does that mean, exactly? Well, it can mean many things. On the one hand we have STK, which leverages RtAudio and provides a suite of signal processing modules that can be used in any configuration the programmer might conjure. On the other hand we have Juce, a fully featured cross-platform application development environment, complete with its own audio I/O, graphics engine, IPC, and even plug-in format wrappers. MusKit will fit somewhere in between these two environments. In other words, STK ⊂ MusKit ⊂ Juce. MusKit will provide the same hardware support and signal processing infrastructure as STK, plus a host of classes for connecting DSP modules in a musically meaningful manner (read: sequencing, timing, external control, etc.), but it won't go so far as to dictate how your application should be structured. It will still be lightweight enough to integrate easily into a larger framework such as Juce, Qt, or Cocoa (via Objective-C++).

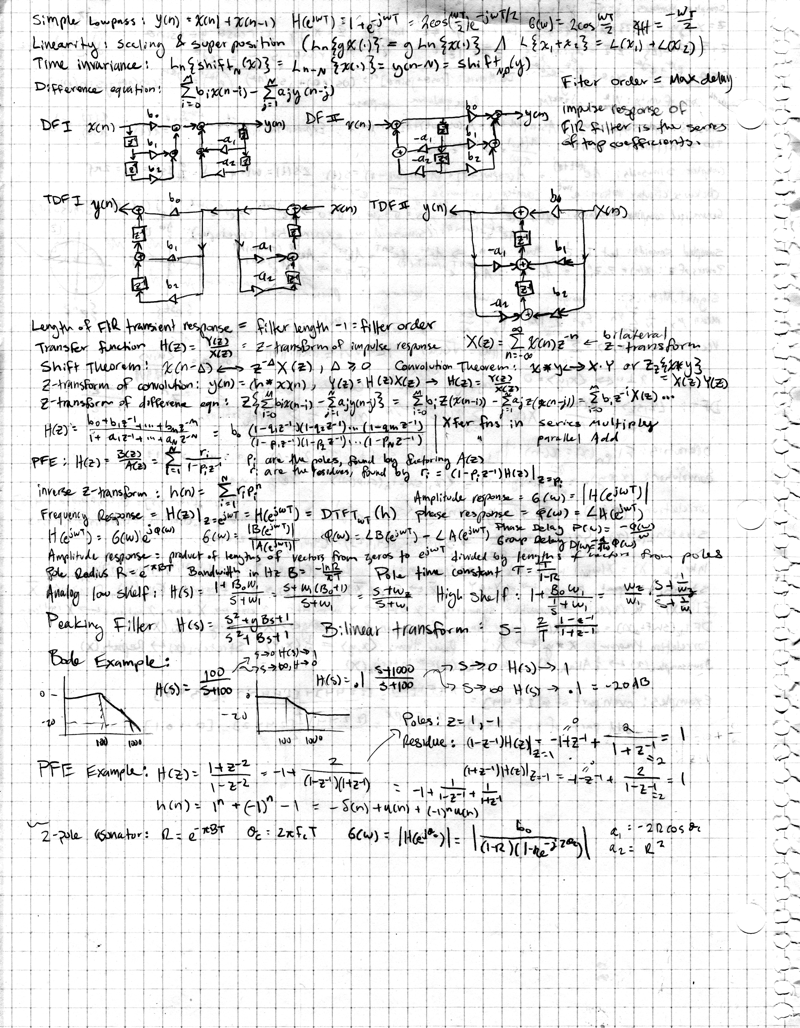

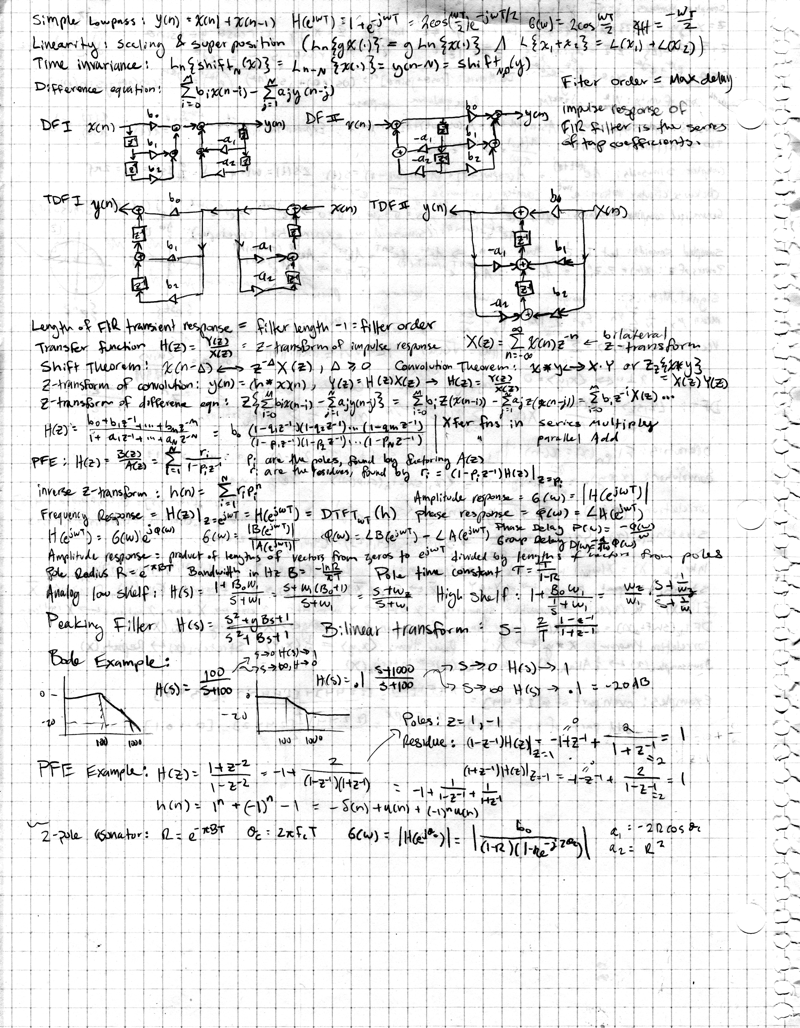

- The DSP final.

'nuff said.

- Lastly, I've applied to the CCRMA PhD program. As only two slots are open for it, odds are I won't get it. But not long into the MA program I realized a few things about myself and my goals:

- I have a lot of respect for my professors, and I want to work with them as much as possible.

- I am 5000% more productive than ever before. And I was never a slacker.

- The work I am doing here is the best I've ever done, despite the short duration of each project.

How perfect would it be to have 4-5 more years to follow my pursuits? I would be able to take many classes (in fact, would be required to take at least 135 units), and work on a number of long-term projects.

Don't get me wrong, I want to have a company that makes great software and push the boundaries of what people have come to expect from music technology. But spending all this time at CCRMA, learning so many things, working on so many projects, I'm constantly reminded of one simple fact:

I don't know shit.

Friday, November 13, 2009

Great show last night

Sweatshop Boys played at CCRMA's fall concert, "A Cagian Music Circus", last night. The event lasted 1.5 hours, with performances happening simultaneously in six areas throughout the building. The free concert drew a crowd of about 150. Our performance took place in the CCRMA Stage, a beautiful and intimate concert space (with no actual stage) designed for multichannel electroacoustic music. It's equipped with two rings of eight ADAM studio monitors plus four subwoofers, for a grand total of 20 channels of 3-D playback. Sweatshop Boys are used to playing only on stereo rigs (bad ones) in acoustically bad spaces, so this was a welcome change, to say the least! We retooled our effects buses to output four discrete channels, which were diffused to the four corners of the space. Also new for us was a highly receptive crowd of like-minded people. The pic above is the only one I have at the moment, but many high-quality pics should be available soon.
